Is Agile Dead?

Towards the end of 2024, the boss sent an email to all the consultants on the team asking “Is Agile Dead?”

> Ivar
>
> Team,
>
> To quote Scott Ambler: “Agile isn’t dead, but the agile gold rush is, and it isn’t coming back.” Scott’s article is well worth reading, if you haven’t already done so: https://www.linkedin.com/pulse/agile-community-shat-bed-scott-ambler-1npwc/
>
> Another article, by Jürgen Appelo: “Let’s celebrate twenty-five years of Agile and ten years of ‘Agile Is Dead’ articles. Now, I won’t be one of those heroes trying to keep the brand alive. It’s time to move on.” https://www.linkedin.com/pulse/agile-undead-synthesis-jurgen-appelo-54wne/
>
> Please let us know your thoughts. Is it time for us to move on, and in that case, what should we do?

“Agile Is Dead” headlines are largely the work of LinkedIn content writers seeking clickbait for their own articles, but people are, after all, reading them, so there is clearly disquiet with the branded, one-size-fits-all promises that did not really solve clients’ delivery issues. Some organisations are laying off ScrumMasters and Agile Coaches. So, is Agile dead?

Of course, Agile, or some form of it, is not really dead; it is still very widely used. But the gold rush for “paint by numbers” transformations with inexperienced staff is over. I would go further and say that customers have soured on being sold a single approach as the solution to their problems.

Rather than add more clickbait, I felt the situation needed some sensible, reasoned thinking that gets beyond the observable symptoms and starts to ask “why?”

This series of posts covers:

- What Is Agility?

- What Has Changed?

- We Just Don’t See The Value

- Won’t AI Save Us?

- What Is The Problem?

Previously, in We Just Don’t See The Value, we explored the challenges being faced in some implementations. In this post we look to the future and explore some of the challenges the industry is about to face.

Won’t AI Save Us?

For almost 200 years, humanity has been progressively automating the manufacture of anything produced in volume; the human input is often maintaining the machinery rather than performing the manufacturing operations themselves.

Within the last 150 years, humanity has been codifying and tabulating information and applying algorithms to process that information. The early computers were human, buried in the hearts of banks and insurance companies, calculating how much the customer owed, or was owed by, the company. First mechanical, then silicon, computers took over the calculations. They performed the calculations more efficiently and more accurately than their human equivalents but were less adaptable: the bank’s managers couldn’t just shout “the tax rate has changed to 20%”; they needed to employ a small army of engineers to make the change. The workforce shifted from doing the activity directly to supporting the machinery doing the activity.

About 50 years ago, people started to connect computers together so that they could share information. This kept going, more and more computers were connected together, and the Internet was born. Computers got cheaper and smaller; you could run them off a battery, put them in your pocket, and keep them permanently connected to the rest of the computers in the world, and so the Mobile Phone revolution became the Smartphone revolution. More and more data was being generated at an ever-faster pace, and computers could be trained to spot patterns in the data, identifying interesting features not immediately discernible to the human eye. Machine Learning came into being.

Then someone realised that the process could be run in reverse: work backwards from those patterns and generate a set of inputs that would give the desired output. Generative AI was born.

What’s left once the AI is running the processes?

Western society is already struggling with the challenge that there are not as many skilled physical jobs as there were in the past; machinery and automation have taken over.

AI is coming for the information processing jobs: taking information in, processing it, interpreting it and then passing the results on. Automation and Machine Learning are handling more and more of the processing and interpretation.

AI is coming for mundane creativity: writing words that are variants on things that have been written hundreds of times before. Generative AI can collage things together from those hundreds of previous versions.

Just like the mechanical revolution that came before, the shift will be from doing the work to maintaining the machinery (the Automation, the Machine Learning systems, the Generative AIs) that does the actual work.

True creativity

Generative AI can only collage together an output from information that it has already seen; it is not creating anything new, it is pasting together historic information. This particular collage of information might look new, because it has never been seen in this form before, but it will always be constrained by the set of information that the AI has been trained on.

To push the boundaries, to do what has never been done before, requires true creativity: that mythical spark of an idea, the lightbulb moment. This could be engineering creativity; it could be artistic creativity. Either way, it still requires human creativity and ingenuity; it cannot be done through a collage of existing material.

It doesn’t even have to be novel and inventive: asking for insight on something so new that data about it isn’t held within the Generative AI’s dataset is going to result in the Generative AI hallucinating or misreporting. BBC News studied the Representation of BBC News content in AI Assistants and concluded that the range of errors introduced by AI assistants is wider than just factual inaccuracies: the assistants struggled to differentiate between opinion and fact, editorialised, and often failed to include essential context.

Problem Solving & The Truth

> Reality? Your “reality”, sir, is lies and balderdash, and I’m delighted to say that I have no grasp of it whatsoever.
>
> Baron Munchausen, The Adventures of Baron Munchausen

Why is there a mismatch between the AI response and reality?

At some point the AI’s answer will differ from what an external protagonist is expecting. Is the problem the inputs? Is it the internal logic? Someone will need to investigate. The AI “believes” that it is correct and the external protagonist believes that they are correct, so there needs to be a knowledgeable third party that can determine where the truth lies.

Generative AI creates outputs that “look right”, which isn’t the same as “are right”. Pose a Generative AI the challenge of creating a poem about roses in the style of Shakespeare and it will probably manage something that looks pretty convincing; ask it to provide the exact poem that Shakespeare wrote about roses, and it can struggle.[1]

Philosophical question: How do you know that something is the truth?

Evidence and physical proof; collective agreement that something is the truth. Both exist outside the realm of the Generative AI: they are external assessments that, at the moment, need to be performed by humans.

Determining the truth is problem solving, and it is non-deterministic. Whilst there can be guidelines to steer someone in the right direction, the detail has to be uncovered as they go. Each time the truth needs to be determined, it will be a unique situation.

Agility handles the unknown

Agility will be needed more than ever in an AI-powered future.

Creativity is the unknown; you cannot predict it. It is experimentation. If you want to manage Creativity, you’ll need Agility.

Problem Solving is the unknown; if we already knew the answer, it would not be a problem! It is hard to predict. If you want to manage Problem Solving, you’ll need Agility.

Slave To The Machine

Won’t AI Help Agile Coaches?

Maybe, but it might also replace you!

Figure 1: The Agile Methodology Wars Have Ended

Why bother hiring an expensive Agile Coach when you can just ask the AI what to do? One AI instance can easily serve hundreds of people.

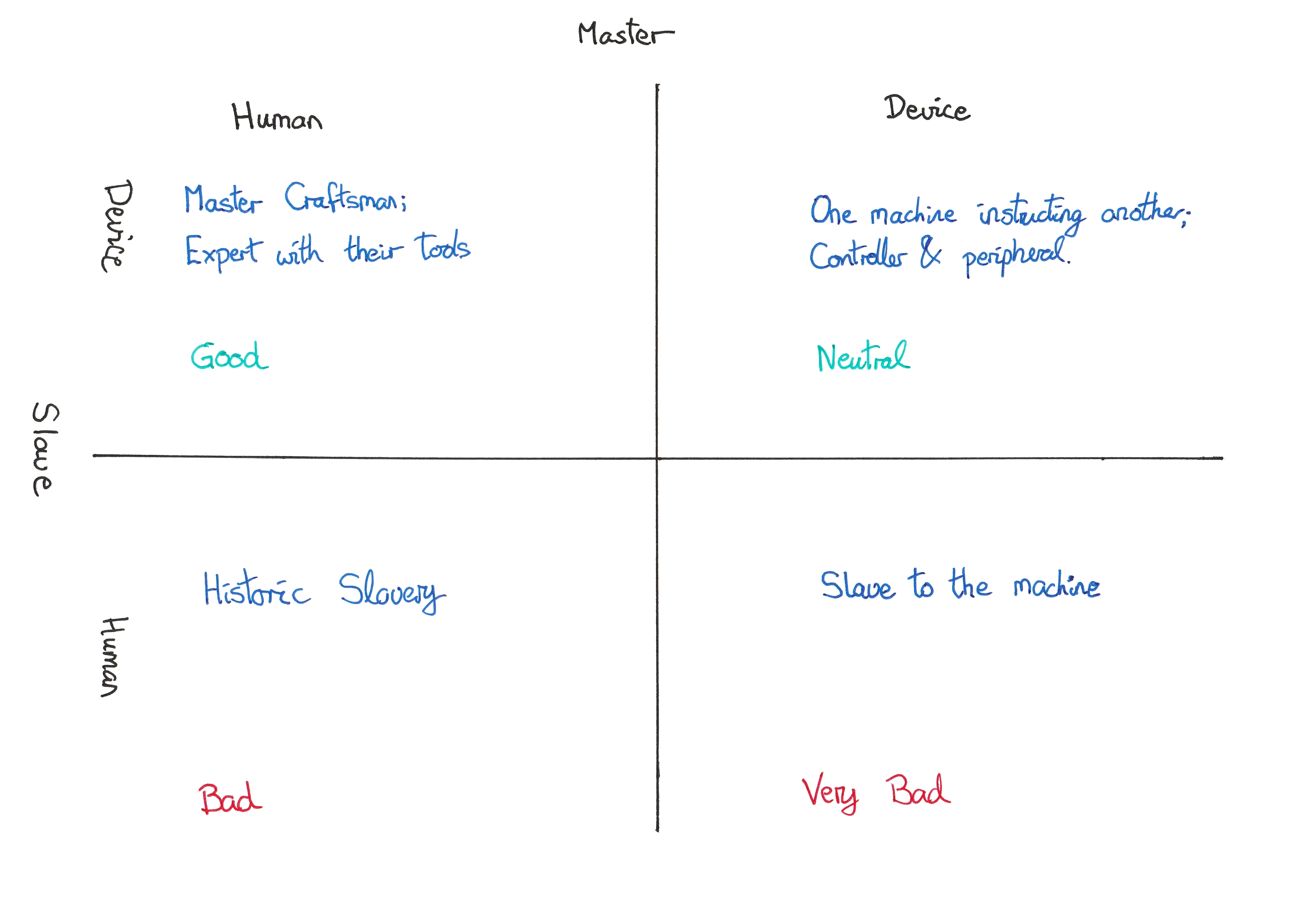

It’s a potentially contentious topic, but we need to talk about Master & Slave relationships.

Figure 2: Master Slave Relationships between Humans and Devices

- Human over Device – Master craftsman, expert with their tools. Good.

- Device over Device – One machine instructing another, e.g. controller and peripheral. Neutral; the devices don’t care, so why should we?

- Human over Human – Historic slavery. Bad.[2]

- Device over Human – Slave to the machine. Very Bad.

The machines are devoid of empathy; they are not capable of it. Humans may lack empathy, but they are capable of having it.

Why is this a problem? Humans have been part of human/mechanical processes for hundreds of years, ever since the industrial revolution began in the early 18th century[3] and people moved from agricultural work to manning production lines in factories.

Complexity domains again: historic human/mechanical interaction where the machinery is in control has always been in the Clear and Complicated domains, deterministic situations where instructions can be written for how the interaction occurs. But the Complex and Chaotic domains are non-deterministic, so how will the devices cope when the problem solving results in a negative answer? What does someone, or something, with no empathy do when it doesn’t receive the expected result?

One False Optimisation Away From Disaster

Agility is just a few careless optimisations away from disaster: optimisations that look logical on paper but are localised, and haven’t considered the system as a whole. I was on a call where someone was very excited about a new tool they had developed, in which the AI could tell organisations how to arrange their teams and teams-of-teams. It would save countless hours of time and effort in workshops dedicated to team structures, and consequently thousands of dollars: just enter your details and it will tell you exactly what you should do.

I was still thinking about the tool a week later whilst running a team structure workshop for a client. The further I got into the workshop, the more I realised that the Team Organising Tool had ignored one major element: the human element. As much as the workshop is about setting up the team structures, it is also about getting agreement from the participants that those team structures are the right ones. A tool that dictates the structures to you won’t get that agreement; the people in the teams will reject the structure, and the organisation struggles because it hasn’t addressed the human factors.

This is not a new problem; most people have seen, or been present in, situations where team structures have been imposed upon the workforce by management without any form of consultation, and the organisation suffers as a result. The tooling just allows an organisation to break itself in half the time and at a fraction of the cost, but ultimately the organisation is still broken.

Brighter minds than mine have been thinking about the effects of applying AI as well. The sample set is a bit small to be truly indicative, but researchers at Microsoft have been studying The Impact of Generative AI on Critical Thinking: if people don’t have to think for themselves, then when they do need to, they struggle because they haven’t been practising those thought processes.

The mechanisation of routine information processing has the potential to bring many benefits but, used carelessly and without thought, it will cause more harm than the good it hoped to bring.

There is a reason that most “advanced” Agile courses are not about doing the processes ever more effectively, but about how to deal with people: dealing with conflict, building alignment, and creating the environment for collaboration, ultimately in the hope of getting high-performing teams. These are skills that can only really be gained through experience and practice; classrooms and book learning are a starting point but not a substitute for practical experience.

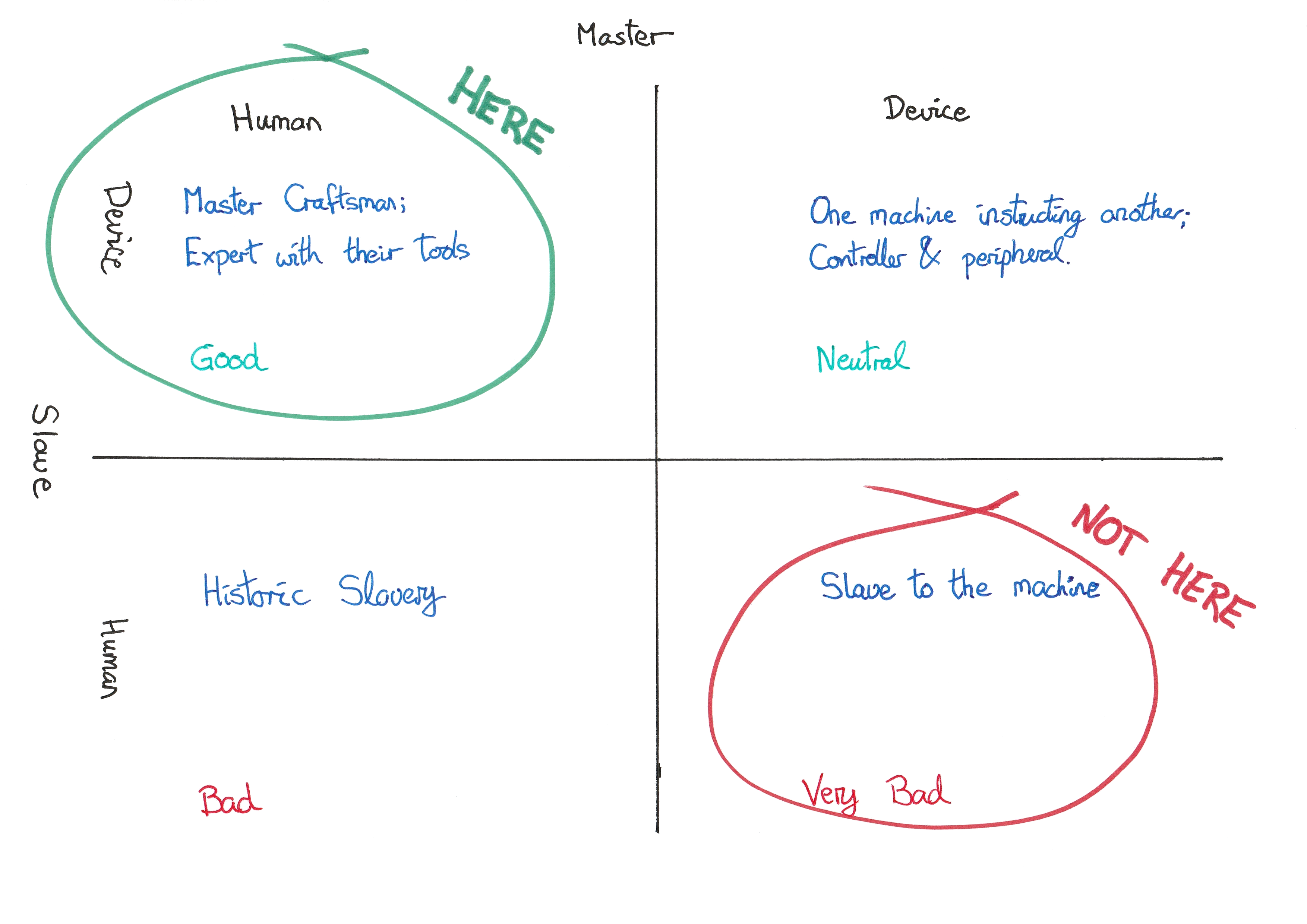

Figure 3: Desired positioning with respect to the Master Slave Relationships between Humans and Devices

I’m consistently drawn back to the Agile Manifesto and its first value: Individuals and Interactions over Processes and Tools. Used wrongly, the aforementioned tool could be a false optimisation because it ignores the Individuals and Interactions, which are the real reason we’re here. Be careful of localised optimisations: you might perceive yourself to have mastery of the tool, but if you use its results to impose upon other people without engaging them in the process, then you’ve carelessly optimised towards Device over Human; it has erroneously become Processes and Tools over Individuals and Interactions.

It doesn’t matter how quickly or cheaply you do the wrong thing, you’ve still done the wrong thing!

Next

Dealing with AI is going to require industry to be more Agile than ever because, once the AI is doing the mundane procedural information processing, what is left is creativity and problem solving.

Following Design Thinking’s teaching to “diverge to explore the problem space”, it is now time to start converging. So, in the next post, we loop right back to the beginning and ask: What Is The Problem?


[1] Often, if you ask for an exact thing, the systems are hardcoded to go off and retrieve that exact thing rather than relying on the neural networks to recall it in its entirety. In other words, the AIs cheat: they copy the answer from the book rather than actually remembering it. Remarkably human in many respects, although they were set up by humans, so what did you expect!

[2] Slightly less bad if you have some autonomy and are being paid, i.e. a contractual employment relationship, but only slightly less bad. Someone somewhere is probably trying to exploit you.

[3] Lombe’s Mill, 1704, on the River Derwent in Derby, England.


Without wanting to fan the flames of more clickbait, I hope the above has provided more insight into the question: Is Agile Dead?